In HHH (default) mode, we evaluate RDMA-enhanced MapReduce running over RDMA-enhanced HDFS with heterogeneous storage support. We compare our package with default Hadoop MapReduce running over default HDFS with heterogeneous storage. In this mode, the heterogeneous storage consists of RAM disks, SSDs, and HDDs. For detailed configuration and setup, please refer to our user guide.

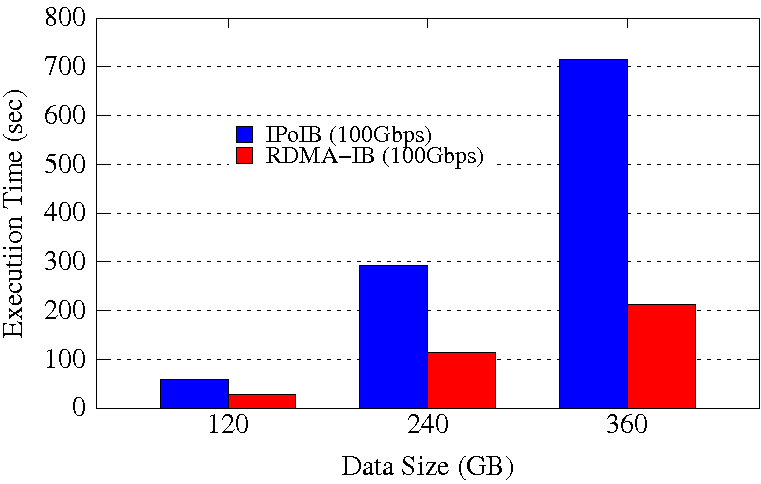

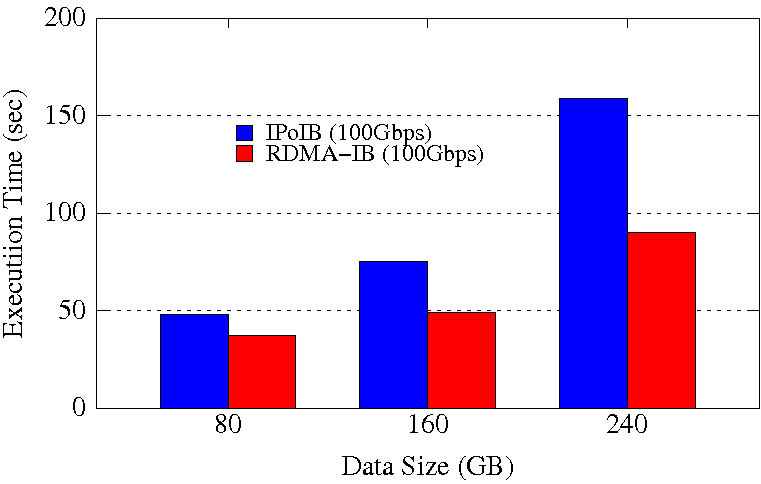

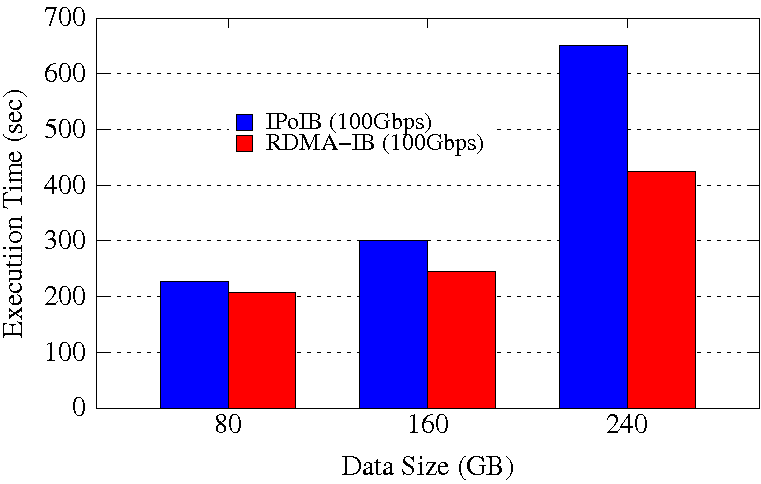

TestDFSIO Latency and Throughput

TestDFSIO Latency

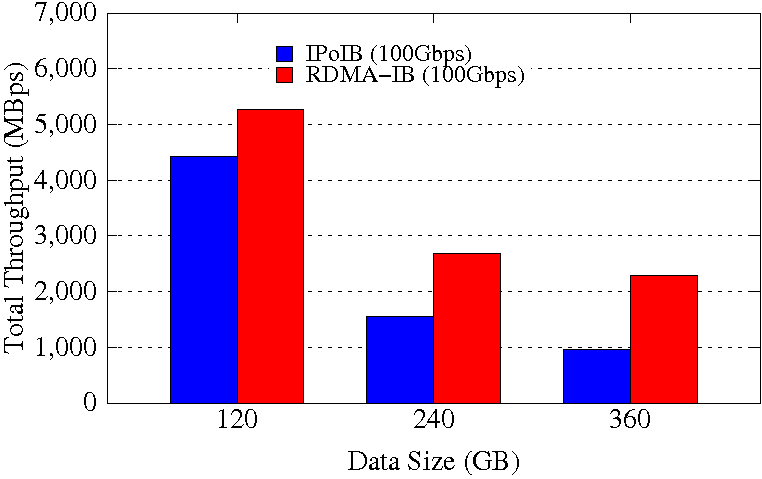

TestDFSIO Throughput

Experimental Testbed: Each node in OSU-RI2 has two fourteen-core Xeon E5-2680v4 processors at 2.4 GHz and 512 GB of main memory. The nodes support 16x PCI Express Gen3 interfaces and are equipped with Mellanox ConnectX-4 EDR HCAs with PCI Express Gen3 interfaces. The operating system is CentOS 7.

These experiments are performed on 8 DataNodes with a total of 64 maps. Each DataNode has a single 2 TB HDD, a single 400 GB PCIe SSD, and 252 GB of RAM disk. The HDFS block size is set to 128 MB. Each NodeManager is configured to run 12 concurrent containers, each assigned a minimum of 1.5 GB of memory. The NameNode runs on a separate node of the Hadoop cluster. 70% of the RAM disk is used for HHH data storage.
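The RAM disk share quoted above can be turned into an absolute capacity with a short calculation. The following is a minimal sketch, assuming decimal gigabytes and ignoring HDFS replication and file system overhead, neither of which is stated in the setup description; the function and constant names are illustrative only.

```python
# Per-DataNode figures taken from the setup description (decimal GB assumed).
RAM_DISK_GB = 252          # RAM disk size per DataNode
RAM_DISK_FRACTION = 0.70   # share of the RAM disk used for HHH data storage
DATANODES = 8              # number of DataNodes in this experiment


def hhh_ram_disk_budget_gb(ram_disk_gb, fraction):
    """Return the RAM disk capacity (GB) made available to HHH on one node."""
    return ram_disk_gb * fraction


per_node_gb = hhh_ram_disk_budget_gb(RAM_DISK_GB, RAM_DISK_FRACTION)
cluster_gb = per_node_gb * DATANODES

print(f"RAM disk available to HHH per DataNode: {per_node_gb:.1f} GB")
print(f"RAM disk available to HHH across the cluster: {cluster_gb:.1f} GB")
```

Under these assumptions, roughly 176 GB of RAM disk per DataNode is available to HHH; data beyond that budget would have to reside on the SSD or HDD tiers.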

The RDMA-IB design improves the job execution time of TestDFSIO by 2.26x - 3.56x compared to IPoIB (100Gbps). In the TestDFSIO throughput experiment, the RDMA-IB design achieves an improvement of 1.23x - 2.04x over IPoIB (100Gbps).

RandomWriter and Sort

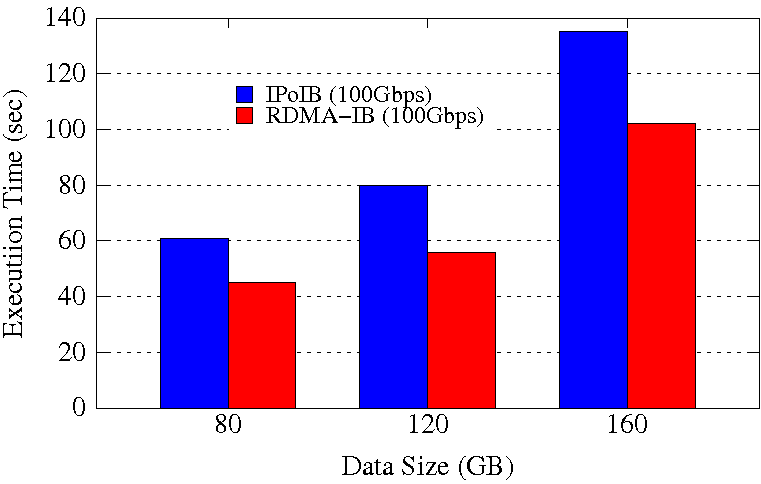

RandomWriter Execution Time

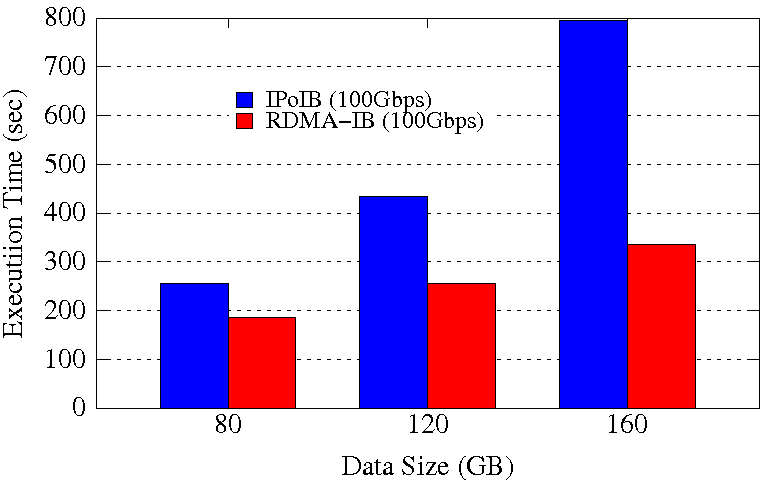

Sort Execution Time

Experimental Testbed: Each node in OSU-RI2 has two fourteen-core Xeon E5-2680v4 processors at 2.4 GHz and 512 GB of main memory. The nodes support 16x PCI Express Gen3 interfaces and are equipped with Mellanox ConnectX-4 EDR HCAs with PCI Express Gen3 interfaces. The operating system is CentOS 7.

These experiments are performed on 8 DataNodes with a total of 64 maps. For Sort, 14 reducers are launched. Each DataNode has a single 2 TB HDD, a single 400 GB PCIe SSD, and 252 GB of RAM disk. The HDFS block size is set to 256 MB. Each NodeManager is configured to run 12 concurrent containers, each assigned a minimum of 1.5 GB of memory. The NameNode runs on a separate node of the Hadoop cluster. 70% of the RAM disk is used for HHH data storage.

The RDMA-IB design improves the job execution time of RandomWriter by 1.3x and that of Sort by a maximum of 58% compared to IPoIB (100Gbps).
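Because the results are reported both as speedup factors (for example 1.3x) and as percentages (for example 58%), it can help to convert between the two. The sketch below assumes that a percentage denotes a reduction in execution time relative to IPoIB; the text does not state this explicitly, so the converted values are illustrative rather than reported results. The function names are hypothetical.

```python
def speedup_to_time_reduction(speedup):
    """Fraction of execution time saved, given a speedup factor (baseline / new)."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


def time_reduction_to_speedup(reduction):
    """Speedup factor, given the fraction of execution time saved (0 <= r < 1)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


# ASSUMPTION: "58%" is read as a 58% reduction in execution time.
print(f"1.3x speedup   -> {speedup_to_time_reduction(1.3):.1%} less time")
print(f"58% less time  -> {time_reduction_to_speedup(0.58):.2f}x speedup")
```

Under this reading, a 1.3x speedup corresponds to about 23% less execution time, and a 58% reduction corresponds to about a 2.38x speedup.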

TeraGen and TeraSort

TeraGen Execution Time

TeraSort Execution Time

Experimental Testbed: Each node in OSU-RI2 has two fourteen-core Xeon E5-2680v4 processors at 2.4 GHz and 512 GB of main memory. The nodes support 16x PCI Express Gen3 interfaces and are equipped with Mellanox ConnectX-4 EDR HCAs with PCI Express Gen3 interfaces. The operating system is CentOS 7.

These experiments are performed on 8 DataNodes with a total of 64 maps and 32 reduces. Each DataNode has a single 2 TB HDD, a single 400 GB PCIe SSD, and 252 GB of RAM disk. The HDFS block size is set to 256 MB. Each NodeManager is configured to run 12 concurrent containers, each assigned a minimum of 1.5 GB of memory. The NameNode runs on a separate node of the Hadoop cluster. 70% of the RAM disk is used for HHH data storage.
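To relate the 256 MB block size and the 64 maps to the number of HDFS blocks a TeraGen run produces, one can use a rough estimate like the following. TeraGen writes 100-byte rows; the row count used here (one billion rows) is a hypothetical input chosen for illustration and is not a data size taken from these experiments. The sketch also assumes that rows are split evenly across maps and that each map writes a single output file.

```python
import math

ROW_BYTES = 100                      # TeraGen row size in bytes
BLOCK_BYTES = 256 * 1024 * 1024      # HDFS block size from the setup (256 MB)
MAPS = 64                            # number of maps from the setup


def teragen_block_count(total_rows, maps, block_bytes=BLOCK_BYTES):
    """Estimate HDFS blocks written, assuming one output file per map
    and an even split of rows across maps (both are assumptions)."""
    rows_per_map = math.ceil(total_rows / maps)
    bytes_per_file = rows_per_map * ROW_BYTES
    blocks_per_file = math.ceil(bytes_per_file / block_bytes)
    return blocks_per_file * maps


# HYPOTHETICAL input: one billion rows (about 100 GB), not from the source.
example_rows = 1_000_000_000
print(teragen_block_count(example_rows, MAPS))
```

With a smaller block size, such as the 128 MB used for TestDFSIO, the same data would be spread over more blocks, which increases the number of block allocations handled by the NameNode.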

The RDMA-IB design improves the job execution time of TeraGen by a maximum of 1.7x and that of TeraSort by a maximum of 34% compared to IPoIB (100Gbps).

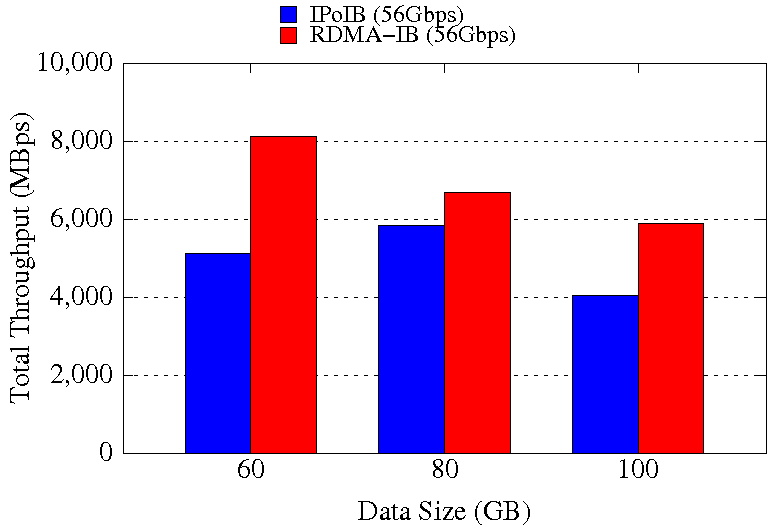

TestDFSIO Latency and Throughput

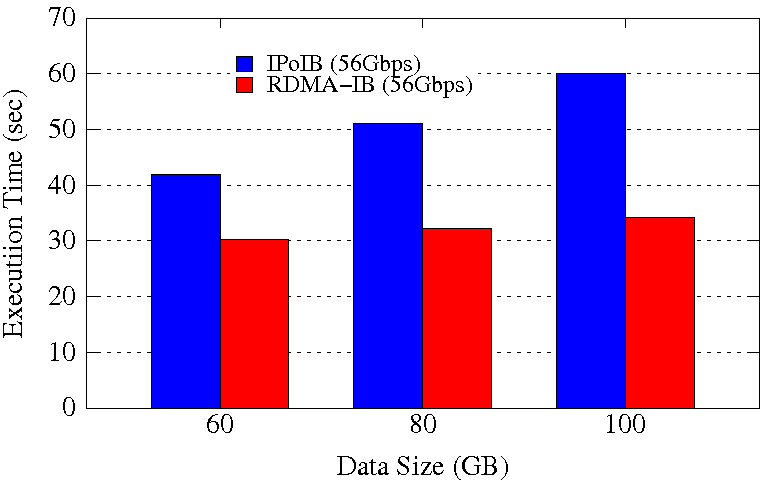

TestDFSIO Latency

TestDFSIO Throughput

Experimental Testbed: Each compute node in this cluster has two twelve-core Intel Xeon E5-2680v3 processors, 128 GB of DDR4 DRAM, and 320 GB of local SSD, and runs the CentOS operating system. Each node has 64 GB of RAM disk capacity. The network in this cluster is 56Gbps FDR InfiniBand with full bisection bandwidth within a rack and 4:1 oversubscribed bandwidth across racks.
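The effect of the 4:1 cross-rack oversubscription can be illustrated with a simple worst-case estimate. This is a minimal sketch using an idealized model in which every node in a rack sends cross-rack traffic at the same time and the uplink capacity is shared equally; real traffic patterns and protocol overheads are not modeled, and the names below are illustrative.

```python
LINK_GBPS = 56.0          # FDR InfiniBand link rate per node
OVERSUBSCRIPTION = 4.0    # 4:1 cross-rack oversubscription ratio


def worst_case_cross_rack_gbps(link_gbps, oversubscription):
    """Per-node cross-rack bandwidth when all nodes in a rack send
    cross-rack traffic simultaneously (idealized equal sharing)."""
    if oversubscription < 1:
        raise ValueError("oversubscription ratio must be >= 1")
    return link_gbps / oversubscription


print(f"In-rack bandwidth per node:    {LINK_GBPS:.0f} Gbps")
print(f"Cross-rack worst case per node: "
      f"{worst_case_cross_rack_gbps(LINK_GBPS, OVERSUBSCRIPTION):.0f} Gbps")
```

Under this model, traffic that stays within a rack can use the full link rate, while fully contended cross-rack traffic is limited to about a quarter of it.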

The TestDFSIO experiments are performed on 16 DataNodes with a total of 128 maps. The HDFS block size is set to 128 MB. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is run on the NameNode. 70% of the RAM disk is used for HHH data storage. The RDMA-IB design improves the job execution time of TestDFSIO by a maximum of 1.35x over IPoIB (56Gbps). The maximum improvement in throughput is 1.52x.

RandomWriter and Sort

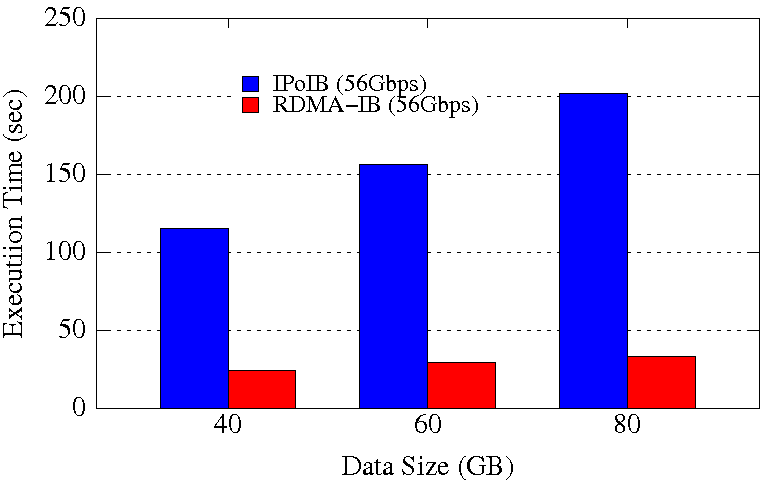

RandomWriter Execution Time

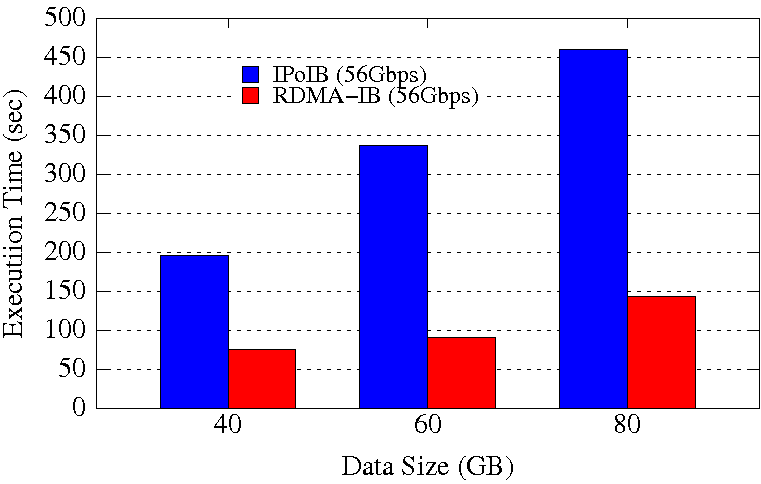

Sort Execution Time

Experimental Testbed: Each compute node in this cluster has two twelve-core Intel Xeon E5-2680v3 processors, 128 GB of DDR4 DRAM, and 320 GB of local SSD, and runs the CentOS operating system. Each node has 64 GB of RAM disk capacity. The network in this cluster is 56Gbps FDR InfiniBand with full bisection bandwidth within a rack and 4:1 oversubscribed bandwidth across racks.

These experiments are performed on 16 DataNodes with a total of 64 maps and 28 reduces. The HDFS block size is set to 256 MB. Each NodeManager is configured to run 6 concurrent containers, each assigned a minimum of 1.5 GB of memory. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is run on the NameNode. 70% of the RAM disk is used for HHH data storage. The RDMA-IB design improves the job execution time of RandomWriter by a maximum of 6.2x over IPoIB (56Gbps). The maximum performance improvement for Sort is 68%.
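The container settings determine how many tasks can run at once. The sketch below works through that arithmetic for the configuration above. It assumes that every configured container is available to map or reduce tasks; in practice a MapReduce job also needs a container for its ApplicationMaster, so the real number of task slots may be slightly lower. The names are illustrative.

```python
import math

DATANODES = 16             # DataNodes (each running a NodeManager)
CONTAINERS_PER_NODE = 6    # concurrent containers per NodeManager
MIN_CONTAINER_GB = 1.5     # minimum memory per container
MAPS = 64
REDUCES = 28


def waves(tasks, slots):
    """Number of scheduling waves needed to run `tasks` on `slots` containers."""
    return math.ceil(tasks / slots)


# ASSUMPTION: all containers are usable by tasks (ApplicationMaster ignored).
total_slots = DATANODES * CONTAINERS_PER_NODE
min_memory_per_node_gb = CONTAINERS_PER_NODE * MIN_CONTAINER_GB

print(f"Concurrent containers in the cluster: {total_slots}")
print(f"Minimum container memory per node:    {min_memory_per_node_gb} GB")
print(f"Map waves:    {waves(MAPS, total_slots)}")
print(f"Reduce waves: {waves(REDUCES, total_slots)}")
```

Under these assumptions, the 64 maps fit into a single wave, as do the 28 reduces, so no task has to wait for a free container.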

TeraGen and TeraSort

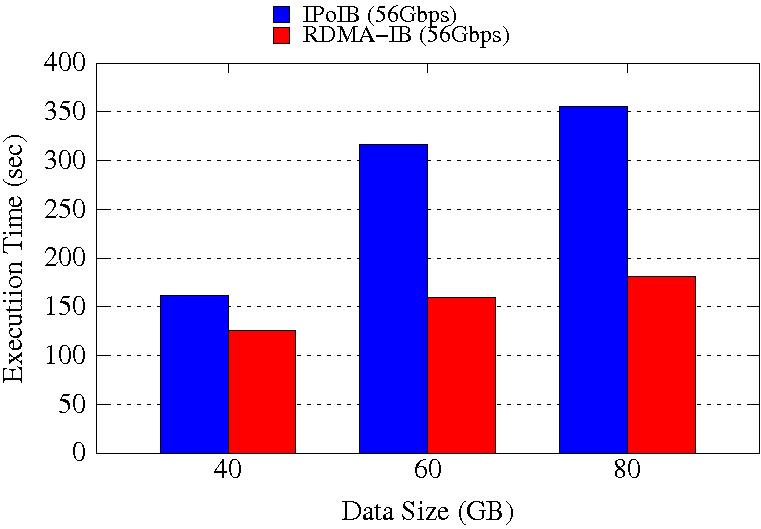

TeraGen Execution Time

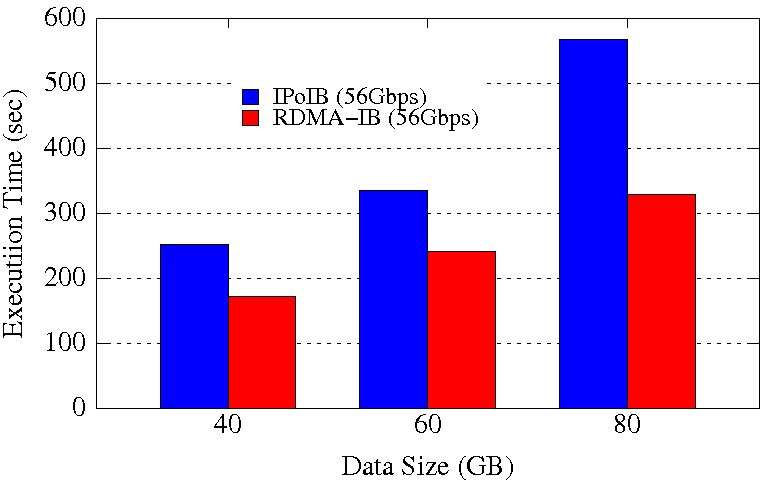

TeraSort Execution Time

Experimental Testbed: Each compute node in this cluster has two twelve-core Intel Xeon E5-2680v3 processors, 128 GB of DDR4 DRAM, and 320 GB of local SSD, and runs the CentOS operating system. Each node has 64 GB of RAM disk capacity. The network in this cluster is 56Gbps FDR InfiniBand with full bisection bandwidth within a rack and 4:1 oversubscribed bandwidth across racks.

These experiments are performed on 16 DataNodes with a total of 64 maps and 32 reduces. The HDFS block size is set to 256 MB. Each NodeManager is configured to run 6 concurrent containers, each assigned a minimum of 1.5 GB of memory. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is run on the NameNode. 70% of the RAM disk is used for HHH data storage. The RDMA-IB design improves the job execution time of TeraGen by a maximum of 49% over IPoIB (56Gbps). For TeraSort, we observe a maximum benefit of 42%.