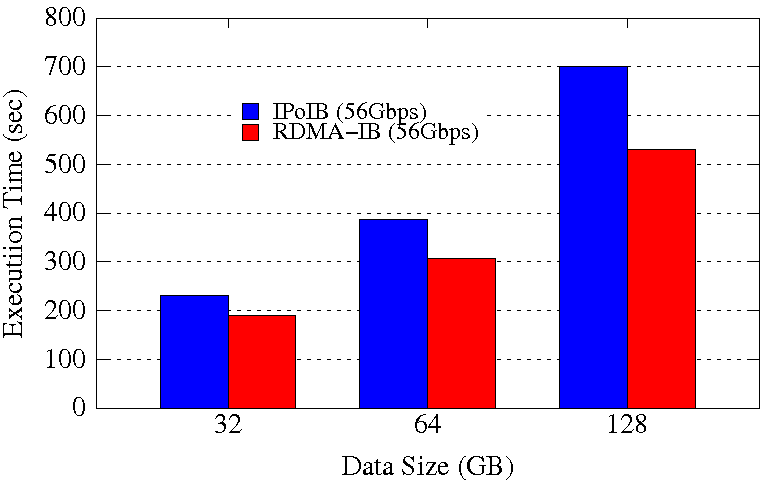

HiBench Sort Execution Time on 32 Worker Nodes / 768 Cores

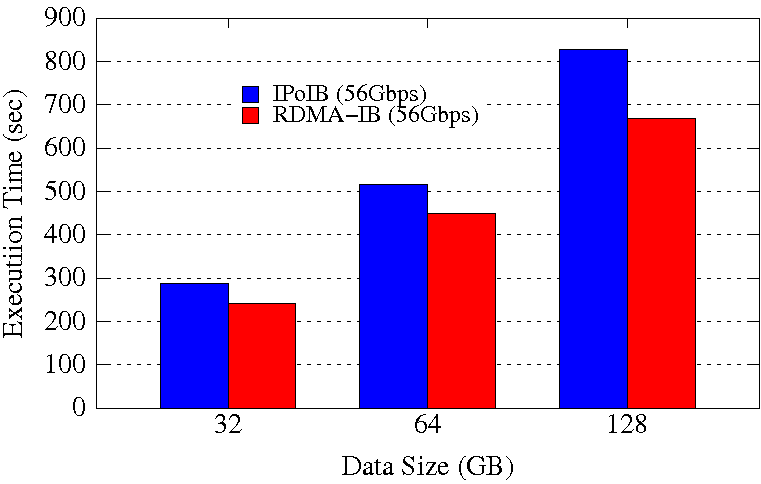

HiBench TeraSort Execution Time on 32 Worker Nodes / 768 Cores

Experimental Testbed: Each compute node in this cluster has two twelve-core Intel Xeon E5-2680v3 processors, 128GB DDR4 DRAM, and 320GB of local SSD with CentOS operating system. The network topology in this cluster is 56Gbps FDR InfiniBand with rack-level full bisection bandwidth and 4:1 oversubscription cross-rack bandwidth.

These experiments are performed on 32 Worker Nodes with a total of 768 maps and 768 reduces so that the job is run with full subscription on 768 Cores in the cluster. Spark is run in Standalone mode. Configuration used is spark_worker_memory=96GB, spark_worker_cores=24, spark_executor_instances=1, spark_driver_memory=2GB. SSD is used for Spark Local and Work data.

The RDMA-IB design improves the average job execution time of HiBench Sort by 18%-24% and HiBench TeraSort by 12%-19% compared to IPoIB (56Gbps).

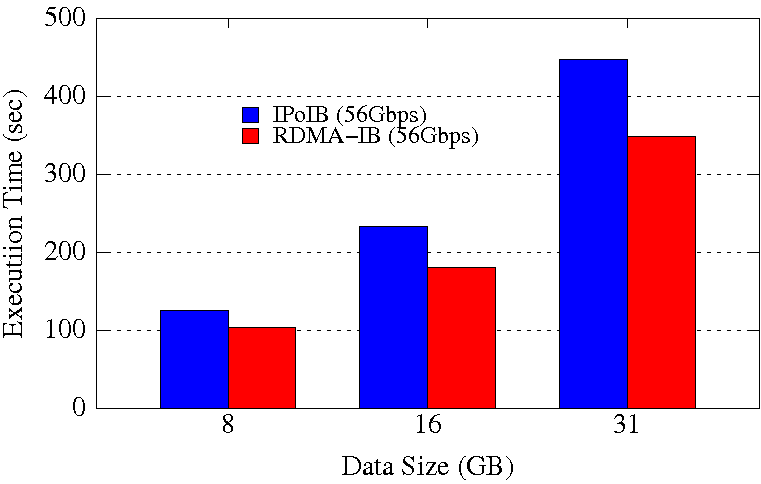

HiBench Sort Execution Time on 8 Worker Nodes / 192 Cores

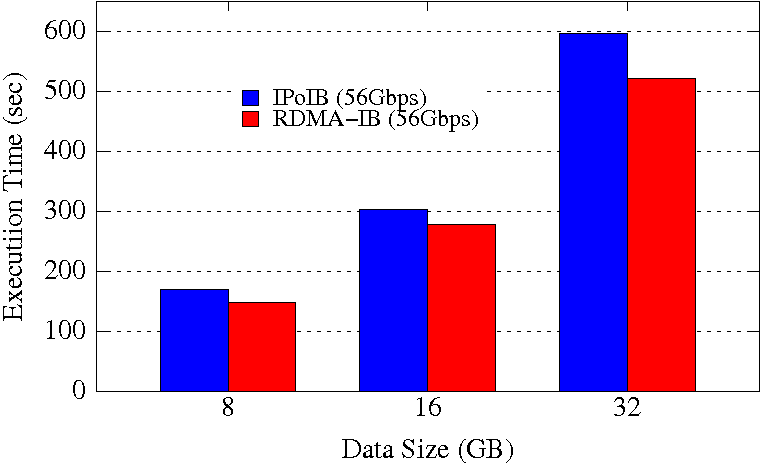

HiBench TeraSort Execution Time on 8 Worker Nodes / 192 Cores

Experimental Testbed: Each compute node in this cluster has two twelve-core Intel Xeon E5-2680v3 processors, 128GB DDR4 DRAM, and 320GB of local SSD with CentOS operating system. The network topology in this cluster is 56Gbps FDR InfiniBand with rack-level full bisection bandwidth and 4:1 oversubscription cross-rack bandwidth.

These experiments are performed on 8 Worker Nodes with a total of 192 maps and 192 reduces so that the job is run with full subscription on 192 Cores in the cluster. Configuration used is spark_worker_memory=96GB, spark_worker_cores=24, spark_executor_instances=1, spark_driver_memory=2GB. SSD is used for Spark Local and Work data.

The RDMA-IB design improves the average job execution time of HiBench Sort by 17%-23% and HiBench TeraSort by 8%-13% compared to IPoIB (56Gbps).