There are five HDFS micro-benchmarks: (1) Sequential Write Latency (SWL), (2) Sequential Read Latency (SRL), (3) Random Read Latency (RRL), (4) Sequential Write Throughput (SWT), and (5) Sequential Read Throughput (SRT). For detailed configuration and setup instructions, please refer to our user guide.
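
The five benchmarks differ in the operation (write or read), the access pattern (sequential or random), and the reported metric (latency or throughput). As a rough, single-client illustration of the two metrics, the sketch below times a sequential write and a sequential read of a local temporary file and reports both the elapsed time (latency) and the data volume divided by that time (throughput). It is a sketch under stated assumptions, not the benchmark suite's implementation: the local file stands in for an HDFS file, and the helper names and the 4 MB chunk and 64 MB file sizes are hypothetical.

```python
import os
import tempfile
import time

CHUNK = 4 * 1024 * 1024  # hypothetical 4 MB write/read unit (not taken from the suite)


def timed_sequential_write(path, total_bytes, chunk=CHUNK):
    """Write total_bytes to path front to back; return elapsed seconds."""
    buf = b"\0" * chunk
    start = time.perf_counter()
    with open(path, "wb") as f:
        written = 0
        while written < total_bytes:
            n = min(chunk, total_bytes - written)
            f.write(buf[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())  # push data to the device before stopping the clock
    return time.perf_counter() - start


def timed_sequential_read(path, chunk=CHUNK):
    """Read path front to back; return (elapsed seconds, bytes read)."""
    start = time.perf_counter()
    total = 0
    with open(path, "rb") as f:
        while True:
            data = f.read(chunk)
            if not data:
                break
            total += len(data)
    return time.perf_counter() - start, total


def throughput_mb_s(nbytes, seconds):
    """Throughput in MB/s; the latency metric is simply the elapsed time itself."""
    return nbytes / (1024 * 1024) / seconds


if __name__ == "__main__":
    size = 64 * 1024 * 1024  # hypothetical 64 MB test file
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "bench.dat")  # local stand-in for an HDFS path
        w = timed_sequential_write(path, size)
        r, nread = timed_sequential_read(path)
        print(f"write: {w:.3f} s, {throughput_mb_s(size, w):.1f} MB/s")
        print(f"read:  {r:.3f} s, {throughput_mb_s(nread, r):.1f} MB/s")
```

A real HDFS measurement would go through an HDFS client instead of local file I/O, and the read numbers here can be inflated by the operating system's page cache.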

HDFS Sequential Write and Read Latency

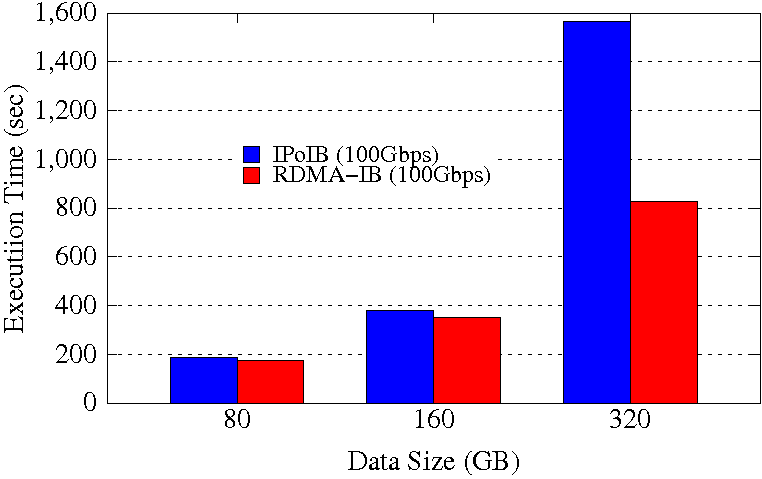

Sequential Write Latency (SWL)

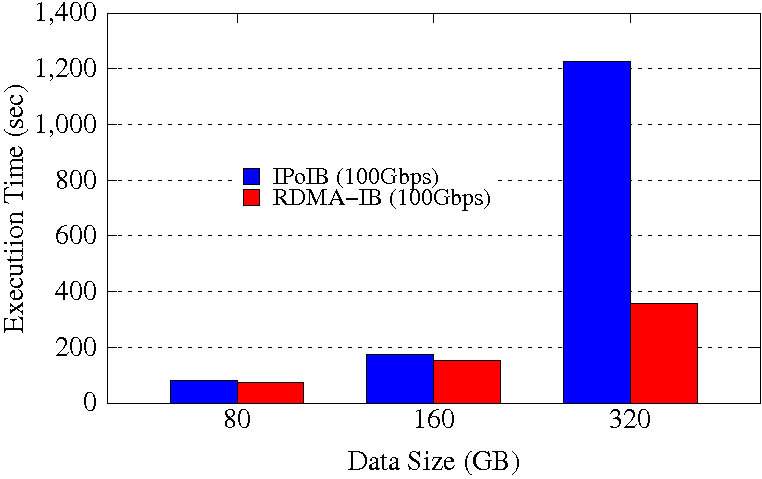

Sequential Read Latency (SRL)

Experimental Testbed: Each node of our testbed has two 4-core 2.53 GHz Intel Xeon E5630 (Westmere) processors and 24 GB of main memory. The nodes support 16x PCI Express Gen2 interfaces and are equipped with Mellanox ConnectX QDR HCAs with PCI Express Gen2 interfaces. The operating system used was Red Hat Enterprise Linux Server release 6.1 (Santiago).

These experiments are run on 4 DataNodes. Each DataNode has a single 2 TB HDD, a single 400 GB PCIe SSD, and a 252 GB RAM disk. The HDFS block size is set to 128 MB. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is launched from the NameNode. The RDMA-IB design reduces the job execution time of SWL by up to 47% compared to IPoIB (100 Gbps). For SRL, it provides an improvement of up to 68.7% over IPoIB (100 Gbps).
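
The percentages above compare RDMA-IB against IPoIB on the same benchmark. Assuming an improvement is computed as the relative reduction in execution time against the IPoIB baseline (the exact formula is not stated here), the arithmetic can be sketched as follows. The 100 s baseline is a hypothetical value chosen for illustration, not a measured result.

```python
def percent_reduction(t_baseline, t_new):
    """Relative reduction of a time metric, in percent (positive means faster)."""
    if t_baseline <= 0:
        raise ValueError("baseline time must be positive")
    return (t_baseline - t_new) / t_baseline * 100.0


def time_after_reduction(t_baseline, pct):
    """Execution time implied by a pct% reduction from t_baseline."""
    return t_baseline * (1.0 - pct / 100.0)


# Hypothetical IPoIB baseline of 100 s (not a measured value).
print(round(time_after_reduction(100.0, 47.0), 1))  # 53.0
print(round(time_after_reduction(100.0, 68.7), 1))  # 31.3
print(round(percent_reduction(100.0, 53.0), 1))     # 47.0
```

Under this reading, "up to 47%" means the best RDMA-IB run took roughly half the IPoIB time for the same SWL configuration.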

HDFS Sequential Write and Read Throughput

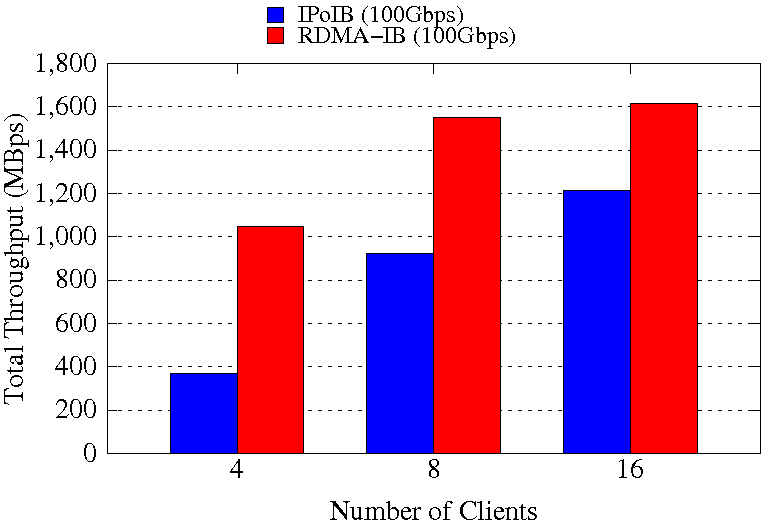

Sequential Write Throughput (SWT)

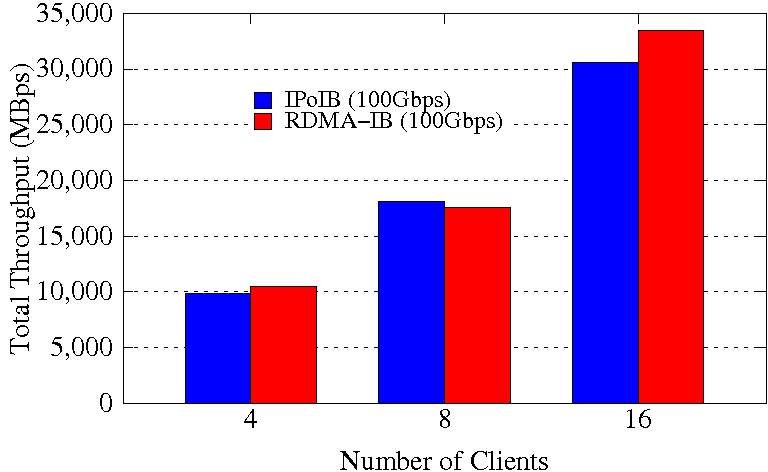

Sequential Read Throughput (SRT)

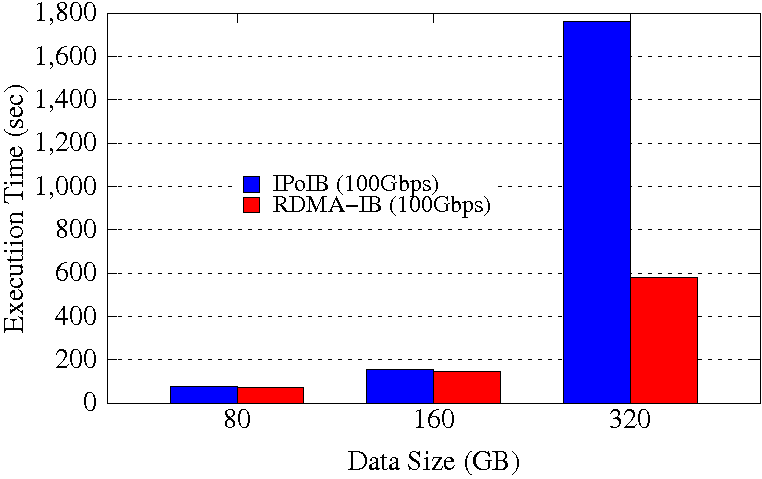

These experiments are run on 4 DataNodes. Each DataNode has a single 2 TB HDD, a single 400 GB PCIe SSD, and a 252 GB RAM disk. The HDFS block size is set to 128 MB. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is launched from the NameNode. The RDMA-IB design reduces the job execution time of SWT by up to 65% compared to IPoIB (100 Gbps). For SRT, it provides an improvement of up to 10% over IPoIB (100 Gbps).
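
SWT and SRT report throughput, so it can help to relate a change in execution time to a change in throughput. For a fixed amount of data, throughput is inversely proportional to time: a time reduction of r (as a fraction) corresponds to a throughput gain of r / (1 - r). The sketch below assumes the same data volume in both runs; the function names are hypothetical.

```python
def throughput_gain_from_time_reduction(pct_time_reduction):
    """For a fixed data volume, convert a % reduction in time into a % gain in throughput."""
    r = pct_time_reduction / 100.0
    if not 0.0 <= r < 1.0:
        raise ValueError("time reduction must be in [0, 100)")
    return r / (1.0 - r) * 100.0


def time_reduction_from_throughput_gain(pct_throughput_gain):
    """Inverse conversion: % gain in throughput -> % reduction in time."""
    g = pct_throughput_gain / 100.0
    if g < 0.0:
        raise ValueError("throughput gain must be non-negative")
    return g / (1.0 + g) * 100.0


# Halving the time doubles throughput; a 10% throughput gain saves about 9.1% of the time.
print(round(throughput_gain_from_time_reduction(50.0), 1))  # 100.0
print(round(time_reduction_from_throughput_gain(10.0), 1))  # 9.1
```

This is why a percentage reduction in execution time and a percentage gain in throughput are not directly interchangeable, especially for large improvements.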

HDFS Random Read Latency

Random Read Latency (RRL)

These experiments are run on 4 DataNodes. Each DataNode has a single 2 TB HDD, a single 400 GB PCIe SSD, and a 252 GB RAM disk. The HDFS block size is set to 128 MB. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is launched from the NameNode. The RDMA-IB design reduces the job execution time of RRL by up to 67% compared to IPoIB (100 Gbps).
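
RRL differs from SRL mainly in the order in which read offsets are visited. The sketch below generates sequential offsets and a random permutation of the same offsets, then maps each offset to the 128 MB HDFS block that holds it. It assumes fixed-size reads aligned to the read size; the suite's actual read size and random-offset scheme are not described here, and `read_offsets` and `block_index` are hypothetical helpers.

```python
import random

BLOCK_SIZE = 128 * 1024 * 1024  # HDFS block size used in these experiments


def read_offsets(file_size, read_size, pattern, seed=0):
    """Offsets of full-size reads over a file, in sequential or random order."""
    offsets = list(range(0, file_size - read_size + 1, read_size))
    if pattern == "random":
        # Random permutation: every aligned offset is read exactly once, in shuffled order.
        random.Random(seed).shuffle(offsets)
    elif pattern != "sequential":
        raise ValueError("pattern must be 'sequential' or 'random'")
    return offsets


def block_index(offset, block_size=BLOCK_SIZE):
    """Index of the HDFS block that contains a given byte offset."""
    return offset // block_size


if __name__ == "__main__":
    one_gb = 1024 * 1024 * 1024
    read_size = 64 * 1024 * 1024  # hypothetical 64 MB read size
    seq = read_offsets(one_gb, read_size, "sequential")
    rnd = read_offsets(one_gb, read_size, "random")
    print([block_index(o) for o in seq])  # blocks in order: 0, 0, 1, 1, ..., 7, 7
    print([block_index(o) for o in rnd])  # the same blocks, visited in shuffled order
```

With sequential reads, consecutive requests usually stay within one block; with random reads, successive requests can land on different blocks, which may be served by different DataNodes.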