MapReduce over Lustre can be evaluated in two configurations. In both, Lustre provides the input and output data directories. For intermediate data, either local disks or Lustre itself can be used. We evaluate our package with both setups and provide performance comparisons here. For detailed configuration and setup, please refer to our user guide.
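As a rough illustration of the difference between the two configurations, the sketch below lays out where each data directory would live. The mount point, the local disk path, and the `data_directories` helper are hypothetical placeholders chosen for this example; the actual settings are described in the user guide.

```python
# Sketch: where each data directory lives in the two configurations.
# All paths are hypothetical placeholders, not values from the user guide.
LUSTRE_MOUNT = "/mnt/lustre"   # assumed Lustre mount point
LOCAL_DISK = "/data/local"     # assumed node-local HDD path


def data_directories(intermediate_on_lustre: bool) -> dict:
    """Return the directory layout for one of the two configurations."""
    intermediate_root = LUSTRE_MOUNT if intermediate_on_lustre else LOCAL_DISK
    return {
        "input": f"{LUSTRE_MOUNT}/job/input",    # always on Lustre
        "output": f"{LUSTRE_MOUNT}/job/output",  # always on Lustre
        "intermediate": f"{intermediate_root}/job/intermediate",
    }


with_local_disks = data_directories(intermediate_on_lustre=False)
without_local_disks = data_directories(intermediate_on_lustre=True)
print(with_local_disks["intermediate"])
print(without_local_disks["intermediate"])
```

Only the intermediate directory changes between the two layouts; input and output stay on Lustre in both.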

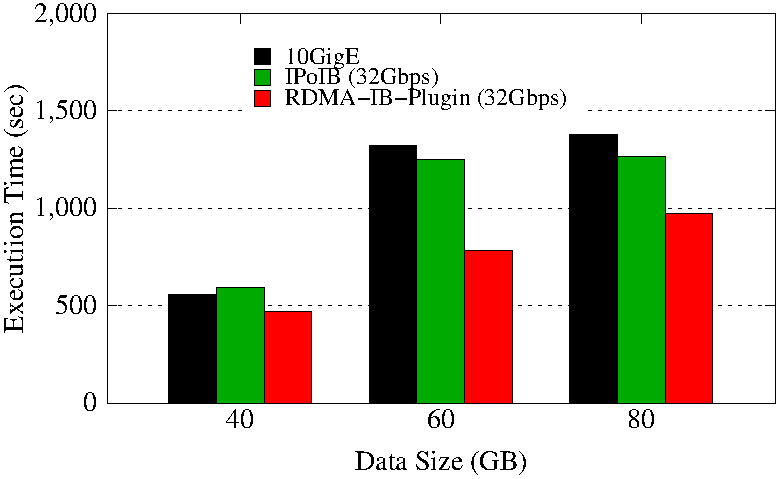

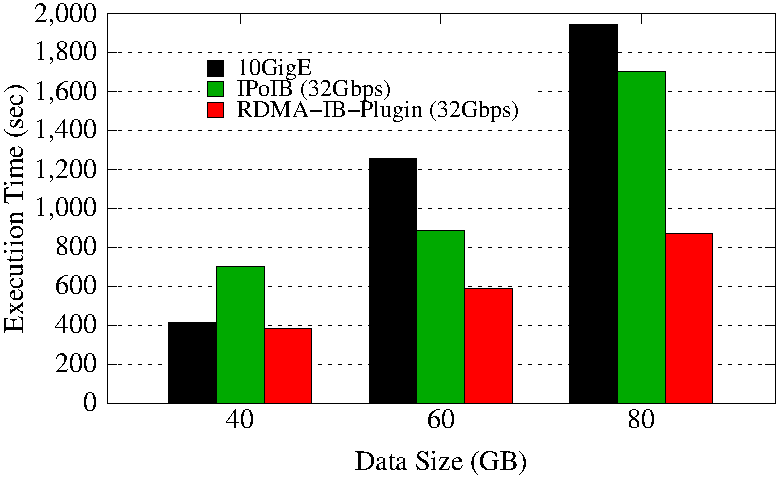

MapReduce over Lustre (with local disks)

TeraSort Execution Time

Sort Execution Time

Experimental Testbed: Each node in OSU-RI has two 4-core 2.53 GHz Intel Xeon E5630 (Westmere) processors and 24 GB main memory. The nodes support 16x PCI Express Gen2 interfaces and are equipped with Mellanox ConnectX QDR HCAs with PCI Express Gen2 interfaces. The operating system used was RedHat Enterprise Linux Server release 6.4 (Santiago). The Lustre installation in this cluster has a capacity of 12 TB and is accessible through IB QDR.

These experiments are performed on 8 nodes with a total of 32 maps. For TeraSort, 16 reducers are launched; Sort runs with 14 reducers. Each node has a single 160 GB HDD, which is configured as the intermediate data directory. The Lustre stripe size is set to 256 MB, which matches the file system block size used in MapReduce. Each NodeManager is configured to run 6 concurrent containers, with a minimum of 1.5 GB of memory assigned per container.
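The numbers in this configuration can be cross-checked with a little arithmetic. The sketch below uses only the values stated above and compares the task count of each job with the number of container slots in the cluster. It is a simplification: it ignores the container taken by the YARN ApplicationMaster and assumes every container is sized at the 1.5 GB minimum.

```python
# Values taken from the experiment description above.
NODES = 8
CONTAINERS_PER_NODE = 6
MIN_CONTAINER_GB = 1.5
NODE_MEMORY_GB = 24
MAPS = 32
REDUCERS = {"TeraSort": 16, "Sort": 14}

# Total container slots across the cluster: 8 * 6 = 48.
cluster_slots = NODES * CONTAINERS_PER_NODE

# Memory claimed per node if every container uses the minimum: 6 * 1.5 = 9.0 GB.
min_container_memory_gb = CONTAINERS_PER_NODE * MIN_CONTAINER_GB

for job, reducers in REDUCERS.items():
    tasks = MAPS + reducers
    print(f"{job}: {tasks} tasks for {cluster_slots} container slots")

print(
    f"Minimum container memory per node: "
    f"{min_container_memory_gb} GB of {NODE_MEMORY_GB} GB"
)
```

Under these assumptions, TeraSort launches 48 tasks for 48 slots and Sort launches 46, while the minimum container memory accounts for 9 GB of each node's 24 GB.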

The RDMA-IB design improves the job execution time of Sort by 34% to 49% and of TeraSort by 21% to 37% compared to IPoIB (32 Gbps). Compared to 10GigE, the improvement is 8% to 55% for Sort and 15% to 41% for TeraSort.
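The text does not spell out how the improvement percentages are computed. A common convention, assumed here, is the relative reduction in job execution time with respect to the baseline interconnect. The function name and the timings below are hypothetical and are not measurements from the figures.

```python
def improvement_percent(baseline_s: float, rdma_s: float) -> float:
    """Relative reduction in execution time versus a baseline, in percent.

    Assumed definition: 100 * (baseline - rdma) / baseline.
    """
    if baseline_s <= 0:
        raise ValueError("baseline execution time must be positive")
    return 100.0 * (baseline_s - rdma_s) / baseline_s


# Hypothetical timings for illustration only (seconds).
example = improvement_percent(baseline_s=200.0, rdma_s=150.0)
print(f"{example:.0f}%")  # prints "25%"
```

With this definition, a job that takes 200 s over the baseline and 150 s with RDMA-IB shows a 25% improvement.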

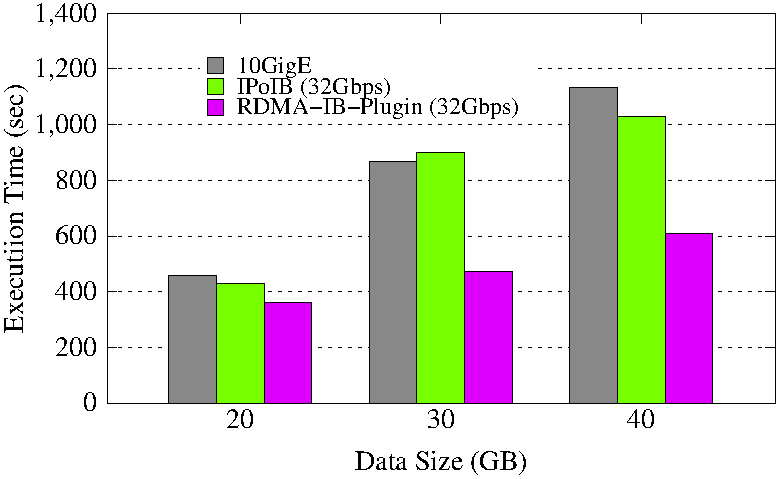

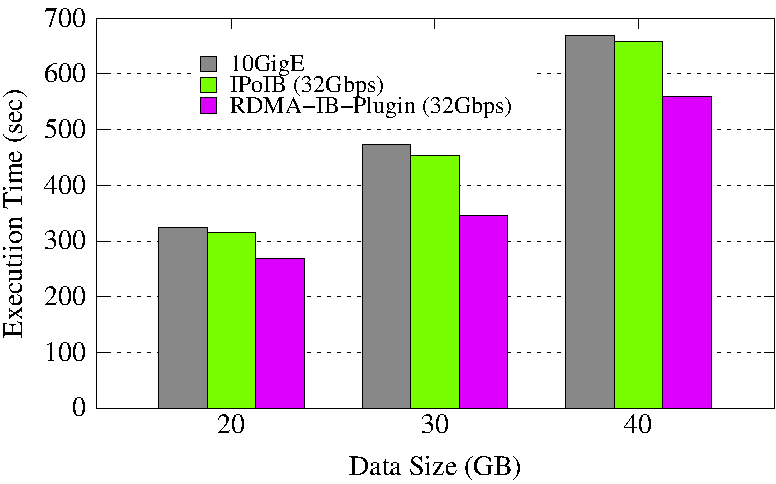

MapReduce over Lustre (w/o local disks)

TeraSort Execution Time

Sort Execution Time

Experimental Testbed: Each node in OSU-RI has two 4-core 2.53 GHz Intel Xeon E5630 (Westmere) processors and 24 GB main memory. The nodes support 16x PCI Express Gen2 interfaces and are equipped with Mellanox ConnectX QDR HCAs with PCI Express Gen2 interfaces. The operating system used was RedHat Enterprise Linux Server release 6.4 (Santiago). The Lustre installation in this cluster has a capacity of 12 TB and is accessible through IB QDR.

These experiments are performed on 8 nodes with a total of 32 maps. For TeraSort, 16 reducers are launched; Sort runs with 14 reducers. The Lustre stripe size is set to 256 MB, which matches the file system block size used in MapReduce. Lustre is configured as the input, output, and intermediate data directory. Each NodeManager is configured to run 6 concurrent containers, with a minimum of 1.5 GB of memory assigned per container.

The RDMA-IB design improves the job execution time of Sort by a maximum of 24% and of TeraSort by a maximum of 47% compared to IPoIB (32 Gbps). Compared to 10GigE, the corresponding improvement is 27% for Sort and 46% for TeraSort.